I spent some time looking at which open source packages have not been maintained or updated, and who depends on those packages. The answer is YOU :)

I really like this quick Reagent query as an example. There are three hundred and fifty Pip packages in the top 5000 Pip packages with no updates since 2020? Perfect for JiaTaning!

CyberSecPolitics

Saturday, April 20, 2024

What Open Source projects are unmaintained and should you target for takeover?

Thursday, April 18, 2024

The Open Source Problem

People are having a big freakout about the Jia Tan user and I want to throw a little napalm on that kitchen fire by showing y'all what the open source community looks like when you filter it for people with the same basic signature as Jia Tan. The summary here is: You have software on your machine right now that is running code from one of many similar "suspicious" accounts.

We can run a simple scan for "Jia-Tans" with a test Reagent database and a few Cypher queries, the first one just looking at the top 5000 Pip packages for:

- anyone who has commit access

- is in Timezone 8 (mostly China)

- has an email that matches the simple regular expression the Jia Tan team used for their email (a Gmail with name+number):

MATCH path=(p:Pip)<-[:PARENT]-(r:Repo)<-[:COMMITTER_IN]-(u:User) WHERE u.email_address =~ '^[a-zA-Z]+[0-9]+@gmail\\.com$' AND u.tz_guess = 8 RETURN path LIMIT 5000

This gets us a little graph with 310 Pip packages selected:

One of my favorites is that Pip itself has a matching contributor: meowmeowcat1211@gmail.com

Almost every package of importance has a user that matches our suspicious criteria. And of course, your problems just start there when you look at the magnitude of these packages.

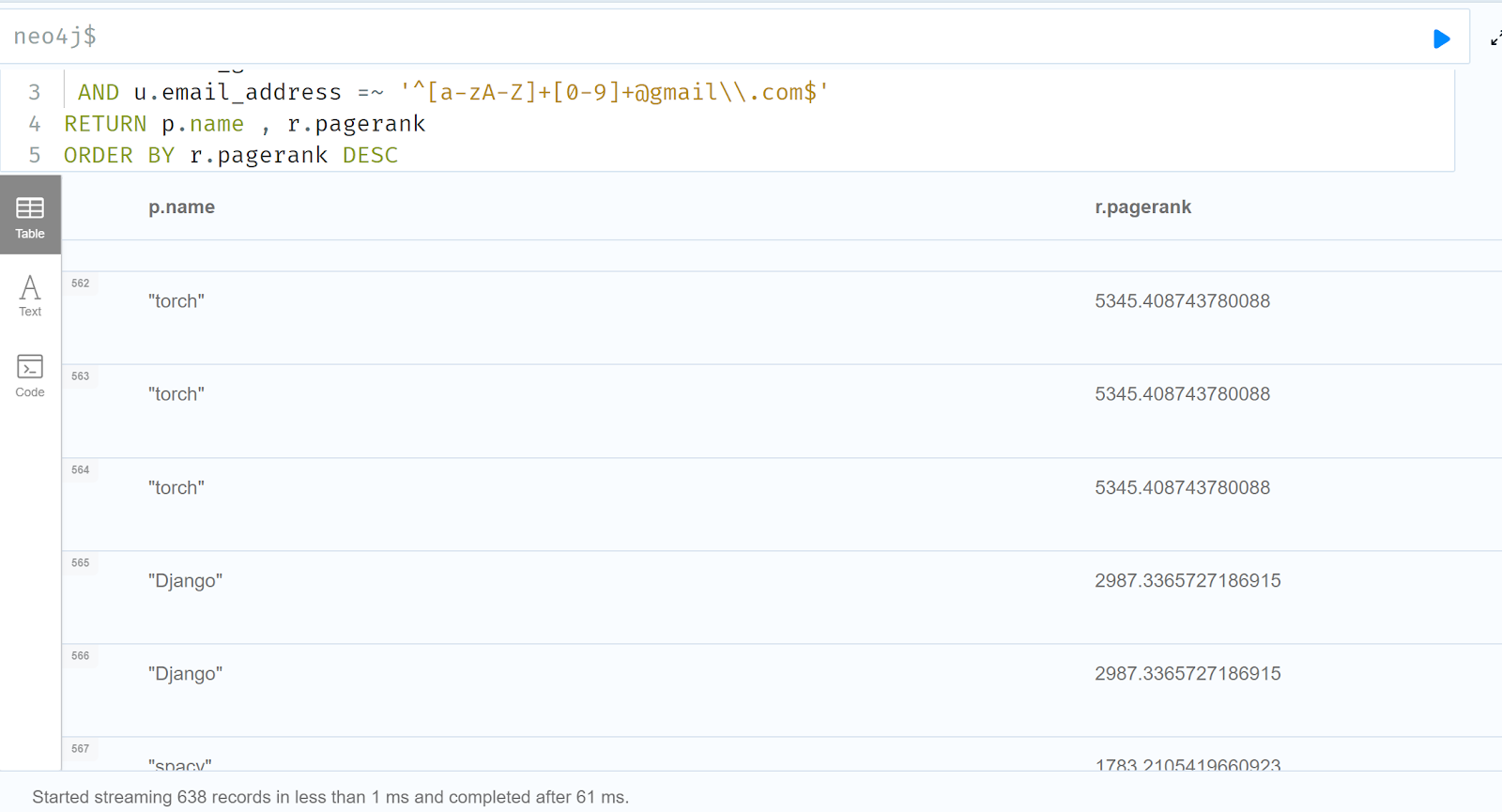
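To make the signature concrete outside the graph database, here is a minimal Python sketch of the same two property checks the first Cypher query applies: the name+number Gmail regular expression and the timezone guess. The `Committer` class, its field names, and the sample addresses are hypothetical stand-ins for the `User` node properties, and the sketch does not model the commit-access relationship at all.

```python
import re
from dataclasses import dataclass

# Same pattern as the Cypher query: letters, then digits, then @gmail.com.
# Cypher's =~ matches the whole string, so fullmatch is used here.
NAME_NUMBER_GMAIL = re.compile(r"[a-zA-Z]+[0-9]+@gmail\.com")


@dataclass
class Committer:
    """Hypothetical stand-in for a User node in the graph."""
    email_address: str
    tz_guess: int  # guessed UTC offset in hours


def matches_signature(user: Committer, tz: int = 8) -> bool:
    """True if the user has the name+number Gmail shape and the given timezone guess."""
    return (
        user.tz_guess == tz
        and NAME_NUMBER_GMAIL.fullmatch(user.email_address) is not None
    )


def flag_committers(users):
    """Return only the users that match the signature."""
    return [u for u in users if matches_signature(u)]


if __name__ == "__main__":
    # Made-up sample data, not real accounts.
    sample = [
        Committer("alice123@gmail.com", 8),
        Committer("alice123@gmail.com", -5),
        Committer("bob.smith@gmail.com", 8),
        Committer("carol99@qq.com", 8),
    ]
    for user in flag_committers(sample):
        print(user.email_address)
```

The point of the exercise is how loose this filter is: it flags a shape of account, not a behavior, which is why so many legitimate contributors match.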

You can also look for matching Jia Tan-like Users who own (as opposed to just commit into) pip packages in the top 5000:

MATCH path=(u:User)-[:PARENT]->(p:Pip)<-[:PARENT]-(r:Repo)

WHERE u.tz_guess = 8

AND u.email_address =~ '^[a-zA-Z]+[0-9]+@gmail\\.com$'

RETURN path

ORDER BY r.pagerank DESC

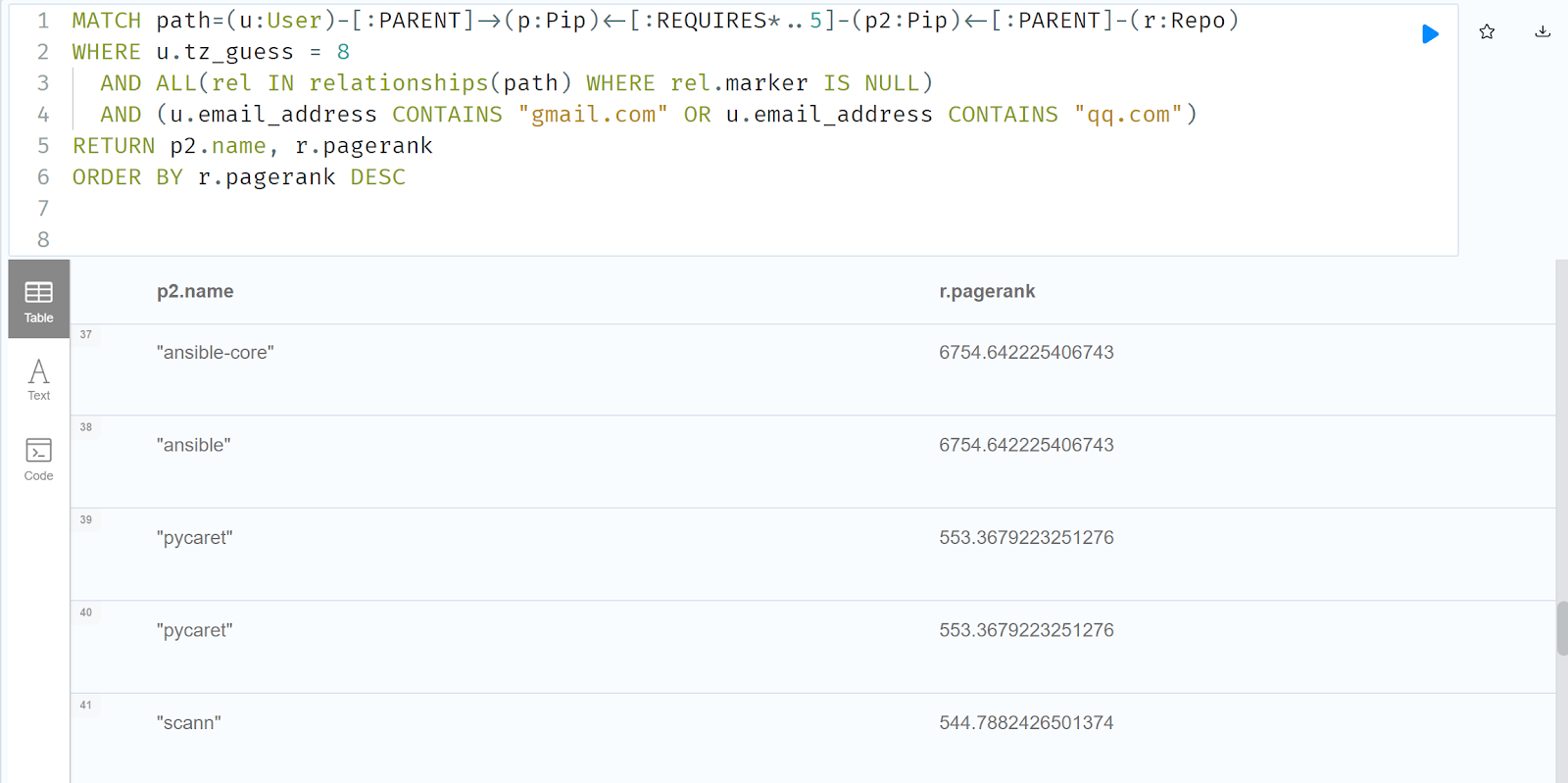

On the other hand, many people don't care about the particular regular expression that matches emails. What if we broadened it out to all Chinese owners of top 5000 Pip packages with either Gmail or QQ.com addresses, and all the packages that rely on them? We sort by pagerank for shock value.

MATCH path=(u:User)-[:PARENT]->(p:Pip)<-[:REQUIRES*..5]-(p2:Pip)<-[:PARENT]-(r:Repo)

One of the unique things about Reagent is we can say if a contributor is actually a maintainer, using some graph theory that we've gone into in depth in other posts. This is the query you could use:

Ok, so that's the tip of the iceberg! We didn't go over using HIBP as a verification on emails, or looking at any time data at all or commit frequencies or commit message content or anything like that. And of course, we also support NPM and Deb packages, and just Git repos in general. Perhaps in the next blog post we will pull the thread further.

Also: I want to thank the DARPA SocialCyber program for sponsoring this work! Definitely thinking ahead!

Wednesday, April 3, 2024

Jia Tan and SocialCyber

I want to start by saying that Sergey Bratus and DARPA were geniuses at foreseeing the problems that have led us to Jia Tan and XZ. One of Sergey's projects at DARPA, SocialCyber, which I spent a couple years as a performer on, as part of the Margin Research team, was aimed directly at the issue of trust inside software development.

Sergey's theory of the case, that in order to secure software, you must understand software, and that software includes both the technical artifacts (aka, the commits) and the social artifacts (messages around software, and the network of people that build the software), holds true to this day, and has not, in my opinion, received the attention it deserves.

Like all great ideas, it seems obvious in retrospect.

During my time on the project, we focused heavily on looking at that most important of open source projects, the Linux Kernel. Part of that work was in the difficult technical areas of ingesting years of data into a format that could be queried and analyzed (which are two very different things). In the end, we had a clean Neo4j graph database that allowed for advanced analytics.

I've since extended this to multi-repo analysis. And if you're wondering if this is useful, then here is a screenshot from this morning that shows two other users with a name+number@gmail.com, TZ=8, and a low pagerank in the overall imported software community who have commit access to LibLZ (one of the repos "Jia Tan" was working with):

There are a lot of signals you can use to detect suspicious commits to a repository:

- Time zones (easily forged, but often forgotten); sometimes you see "impossible travel" or time-of-life anomalies as well

- Pagerank (measures "importance" of any user in the global or local scope of the repo). "Low" is relative but I just pick a random cutoff and then analyze from there.

- Users committing to areas of the code they don't normally touch (requires using Community detection algorithms to determine areas of code)

- Users in the community of other "bad" users

- Have I Been Pwned or other methods of determining the "real"-ness of an email address - especially email addresses that come from non-corpo realms, although often you'll see people author a patch from their corpo address, and commit it from their personal address (esp. in China)

- Semantic similarity to other "bad" code (using embeddings from CodeBERT and Neo4j's vector database for fast lookup)
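As a rough illustration of the first signal in the list above, here is a small Python sketch that groups commit timestamps by author and reports anyone whose commits carry more than one UTC offset. The input format (author, ISO 8601 timestamp with offset) and the function names are assumptions for the example, not how Reagent stores or scores this. A mixed set of offsets is only a prompt for a closer look, since daylight saving time and ordinary travel produce the same pattern.

```python
from collections import defaultdict
from datetime import datetime


def offsets_by_author(commits):
    """Map each author to the set of UTC offsets (in hours) seen in their commits.

    `commits` is an iterable of (author, iso_timestamp) pairs, where the
    timestamp carries an explicit offset such as +08:00.
    """
    seen = defaultdict(set)
    for author, timestamp in commits:
        offset = datetime.fromisoformat(timestamp).utcoffset()
        if offset is None:
            continue  # no offset recorded, nothing to compare
        seen[author].add(offset.total_seconds() / 3600)
    return dict(seen)


def mixed_timezone_authors(commits):
    """Return, sorted, the authors whose commits show more than one UTC offset."""
    by_author = offsets_by_author(commits)
    return sorted(a for a, offsets in by_author.items() if len(offsets) > 1)


if __name__ == "__main__":
    # Made-up commit metadata for illustration only.
    history = [
        ("a@example.com", "2024-02-23T23:09:59+08:00"),
        ("a@example.com", "2024-03-09T10:00:00+02:00"),
        ("b@example.com", "2024-01-01T09:00:00+08:00"),
    ]
    print(mixed_timezone_authors(history))
```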

You can learn a lot of weird/disturbing things about the open source community by looking at it this way, with the proper tools. And I'll dump a couple slides from our work here below (from 2022), without further comment:

Wednesday, June 15, 2022

The Atlantic Council Paper and Defending Forward

One thing I liked about the new Cyber Statecraft paper is it had some POETRY to the language for once! Usually these things are written by a committee that sucks all the life out of it.

I have a number of thoughts on it though.

First of all: Defend Forward is not the totality of the shift in thinking that is happening out of the DoD, which is more properly labeled "initiative persistence" or "persistent engagement" perhaps? There's a LOT to it, of which Defend Forward is a tiny tiny piece.

"A revised national cyber strategy should: (1) enhance security in the face of a wider range of threats than just the most strategic adversaries, (2) better coordinate efforts toward protection and security with allies and partners, and (3) focus on bolstering the resilience of the cyber ecosystem, rather than merely reducing harm."

It is EXTREMELY weird that we can plan for Finland to join NATO in a conventional sense but not a cyber sense - it feels like there is maybe a gap in terms of our coordination with NATO and other allies - meaning we don't properly understand how to project a security umbrella yet/still. Coordination is hard in cyber.

So it's difficult to disagree with these terms on their face - the evidence says that yes, low level behavior (ransomware) IS STRATEGIC - and, yeah, we treat it strategically. Coordination with allies always sounds good and "more partnering" does as well. Having more resilience is never a bad thing, right?

But a critique could easily be: Adding resilience to the internet is often about doing REALLY HARD THINGS. It is not about making VEP choices, the way some would want us to think it is.

It is more likely to be instituting 0patch and deny listing various untrustworthy software vendors across all US infrastructure, forcing Critical Infrastructure to use a Government EPS, and otherwise doing really unpopular things.

The FBI has been going around removing botnets. How can we get PROACTIVE ABOUT THAT - installing patches before systems even get hit? That's adding resilience.

I mean, looking at this all through a lens of counterinsurgency is not necessarily new: https://seclists.org/dailydave/2015/q2/12

Nobody has the illusion that we are going to achieve cyber superiority, like we got air superiority during the Iraq war. That's by definition not what initiative persistence is about!

Defend Forward itself can be instantiated in a few different ways, i.e. teams going out to help people and collect implants on Ukrainian systems, us hacking things we think someone else is going to hack, us hacking or using SIGINT/HUMINT on cyber offensive teams themselves. Literally none of these things require or assume cyber superiority.

"US policy is on two potentially divergent paths: one that prioritizes the protection of US infrastructure through the pursuit of US cyber superiority, and one that seeks an open, secure cyber ecosystem."

These paths are not opposed - we use defend forward in many cases to ENSURE an open and interoperable network - one that supports our values.

It's also extremely hard to judge the value of these operations via public record. When they work, they largely remain covert, or occluded at the least.

While the author claims that Defend Forward is most useful against strategic adversaries - this is probably very not true as they are hardened targets. It's more that defend forward is a high resource requirement activity, for the most part. So you use it when you MUST, not just when you CAN.

"This is not to say that Defend Forward is a bad strategy so much as it is not a strategy on its own and not a means of fully realizing the goals of the current US cybersecurity strategy. Indeed, its place as the paramount concept of US cyber strategy is in tension with broader US objectives of a secure and stable cyberspace."

Yeah I don't think anyone thinks Defend Forward is the whole breakfast.

But making our goal "A secure and stable cyberspace" is like making our goal "A secure and stable and prosperous Afghanistan". This is basically an argument for massive ongoing subsidies from the US taxpayer to someone else, without end.

"The Command Vision for US Cyber Command explicitly focuses on the actions of Russia and China, and relegates its considerations of a broader set of adversary operations impacting overall economic prosperity to a footnote"

Another way to put this is Cybercom is a STARTUP and doing their best with limited resources.

"The next US Cyber Strategy should take account of ongoing policy changes and redouble efforts to support public-private partnerships investing against capabilities and in infrastructure rather than just response. To aid smaller, less well-resourced companies, the US government should fund security tooling access and professional education for small-to-medium enterprises (SMEs) while working to improve the size and capacity of the cybersecurity workforce at a national scale. There have been several legislative efforts to effect such a change: HR 4515, the Small Business Development Center Cyber Training Act36 and the cybersecurity provisions within HR 5376, the Build Back Better Act.37 In addition, further legislation is required to make permanent the cybersecurity grant program under the recently passed infrastructure bill (Public Law 117-58) with the added guidance from the Cybersecurity and Infrastructure Security Agency (CISA).38"

Like, whatever. These are just random subsidies that don't help. If we have money sloshing around, then they probably don't hurt too much either. What we heard on the CISA call was that smaller CI companies basically want the government to take over their security responsibilities. But this is a huge deal. It's not something we can just do. Security is built into how you run your whole company.

"CISA, in cooperation with its Joint Cyber Defense Collaborative (JCDC), the Department of Justice, and the Treasury Department, should compile clear, updated guidance for victims of ransomware, including how victims unable or unwilling to make ransomware payments can request aid from the Cyber Response and Recovery Fund.39 Further legislation should focus on federal subsidies for access to basic, managed cybersecurity services like email filtering, secure file transfers, and identity and access management services."

Did a managed service provider write this? This is a very weird call for subsidies. Maybe instead the USG should make large software vendors not charge more for security features than for the base product?

"Adversaries, knowing this point of friction, would then benefit from moving through this grey space, pairing their operational goals with the strategic impact of forcing the United States to move against the interest of US allies."

I feel like whoever wrote this line has a very limited understanding of OCO. It's not wrong that the very idea of hacking random German boxes annoys the Germans. But that calls for responsible OCO efforts and communicating what those are. And of course, not all Defend Forward is OCO.

"This means a shared, or at least commonly understood, vision for the state of the domain, as well as agreement and understanding as to the acceptable methods of operation outside a state’s “territory” and through privately owned infrastructure."

In other words, wouldn't it be great if there was a norm against hacking certain things? Well....maybe! But this is an unreasonable ask. We are not getting real norms any time soon.

Similarly this kind of language is unrealistic:

"United States Cyber Command (CYBERCOM) should coordinate explicitly with the defense entities of US allies to set expectations and parameters for Defend Forward operations. These should include agreed-on standards for disclosure of operations and upper limits on operational freedom to an appropriate degree, recognizing that such decisions are rarely black and white. Similarly, DoD should work with CISA’s JCDC to coordinate its offensive action with the largest private-sector entities through whose networks and technologies retaliatory blows, and subsequent operations, are likely to pass. This coordination should strive to establish a precedent for communication and cooperation as possible, recognizing the significant effect that offensive activities can have on defenders."

Cybercom should coordinate with Amazon and Microsoft because of potential retaliation? This is nonsense. It is someone hoping to kill a program that works and delivers results. Perhaps I'm being overly harsh - no doubt DoD already has relationships with these entities and does work through them in various aspects. But that doesn't mean they should have a veto or even pre-warning on various engagements. It's definitely true that taking side effects into consideration is a big part of being responsible when you do OCO in general (and defend forward in specific), but it's unlikely we are in a position now to have conversations about agreed-upon norms for these operations.

"However, the fallout from the incident also inspired questions about the apparent paradox of securing cyberspace by preparing weapons to compromise it.60"

We don't use these tools to "secure the internet". We use them to gather intelligence and help secure our nation.

"While there is language about the importance of improved ecosystem resilience throughout US cyber strategy documents, this topic deserves far richer treatment than a framing device."

If we actually wanted to improve the ecosystem, we would have explained to people that running VPNs that have Perl on them was a bad idea. We would stop using Sharepoint. We would publish the penetration testing results into a lot of modern equipment and let the transparency kill them off...

But this is politically impossible.

"A strong example of this would be public-private investment in memory-safe code that can reduce the prevalence of entire classes of vulnerability while providing the opportunity to prioritize mission-critical code in government and industry."

Yeah.....like why does the public need to invest in this? Rust exists. Java exists and the USG is one of the biggest developer shops for it. What are they trying to get USG to pay for exactly?

There's also a lot in this paper about giving more jobs to CISA JCDC which already has a ton of jobs. It cannot do everything.

"As the United States redevelops its national cyber strategy, the question of overall political intent must stand at the forefront. This strategy needs to clearly address the dissonance between the stated policy goals of protection and domain security—a tall order, but a feasible one. Proactive offensive cyber operations that protect US infrastructure and interests are, and will continue to be, necessary. But just as in counterinsurgencies of the past, the United States must ensure that it does not fall into a “strategy of tactics,”66 losing the war by winning the battles."

We should at least explain things better to our allies. That much is true. They possibly are really confused and confused means annoyed. But the rest of this paper is a lot of tilting at a strawman argument nobody in the DoD or elsewhere has put forth.

Monday, April 18, 2022

FORECASTING

The news is filled with cyber hot takes on Ukraine. As someone said to me a few decades ago though - "When it's in the news, it's operations. Our job is the future." And at some level, the war in Ukraine has been stamped out already in the astonishing fortitude of Ukraine, economic and political realities, and the also frankly mind-blowing efforts of various intel groups, only visible with the right set of binoculars.

One thing I struggle with when Forecasting, actually, something I see everyone struggle with, is that we don't forecast our own efforts very well. Nobody predicted we would drop a ton of highly sensitive information out into the NYT regarding Russian war plans. And if you didn't predict that (or worse, didn't notice it while it was happening), you missed a major strategic development.

A lot of the rest of it, cyber attacks on critical infrastructure networks, drone usage, face recognition being used for psyops, was easy to predict, but not as interesting other than for policy papers crowing about being correct in various journals (or, ironically, claiming coup for incorrect predictions and assessments).

Was it predictable that the Ukrainians would lap the Russians at social media information ops? I think it was, and I think the Russians would be the first to admit it was, when being honest with themselves.

But we do have conflicts closer to home. I want to say this only once, because it is a worry that not only I hold, but that nobody I know can say out loud: I worry about US.

Every recent science fiction novel has talked about a United States split to some degree along ideological grounds and I worry more about the Court's decision in June on abortion than I do the Russian conflict. You should too, and I want to illustrate why with a little sample from my neighborhood.

I took my kids to the local graveyard, a short walk away, in Wynwood, an "up and coming" neighborhood in Miami, famous for its art galleries and fine dining. It is an old graveyard by US standards.

Thursday, December 30, 2021

Guest Post: HostileSpectrum’s futures: Looking back on 2021’s estimative signposts

We are all familiar with the constant flurry of predictions for the coming year that flood our inboxes around this time, where vendors and their marketing teams all compete for decisionmaker attention as folks take stock of where their organizations have been, and where they are going. In their best forms, such products are supposed to be formal futures intelligence estimates – crafted through deliberate tradecraft in which novel hypotheses are weighed by experienced analysts, supported or challenged based on unique collection, and tested through structured methodologies. In industry, delivering such finished intelligence (FINTEL) was originally intended to support decisionmakers setting strategy and investments for the new year, or at least considering the stance by which they would approach the problems coming down the line they had not yet anticipated.

Like many things in the cyber threat intelligence business, the annual estimate has been copied in form without consideration of function. Along the way, it is bastardized by pressures of marketing teams which serve as both production requirement and funding lines for all too many intel shops, but introduce unique analytic pathologies to the process. Our community increasingly abandons established analytic methodologies in favour of single point predictions relying solely on “expert” judgement. Needless to say, this is generally not how good intelligence is done.

Recent years have taken this to a breaking point of absurdity. We were fortunate, then, to be able to laugh in the face of the absurd. Kelly Shortridge showed us all the way, letting a Markov text generator take over one year. While almost certainly lightly edited for human readership, the piece was not only quite funny in its own right, but a biting observation of what had become formulaic repetition of evergreen tropes devoid of thought and comfortably numbing in presentation of the familiar. Of course, this hit in a year when the world we knew was reshaped.

But when the laughter dies away, what are we left with? A void, into which the same empty imitations of FINTEL are poured, and that continues to stare back at practitioners and policymakers alike. In the darkness of the long winter’s evening, this challenges us to do better.

Having stepped away from the production line of the intelligence machinery, and eschewing for this purpose the archaic rituals of academic publishing, one naturally turns to the medium of the age. As revolutionary as the blog format has been to the intelligence communities of practice (sufficiently so as to both result in an unusually well popularized effort anchored from an early paper in what was the most secretive of environments), the maintenance and sustainment of lightweight publishing platforms in an ecosystem overrun by parasites and cannibalized by the major platforms has left only a few remaining bastions of both longform and relevant thought.

Last year, I published my own yearly predictions, attempting to break the analytical mold, on Twitter. They caused, for what it is worth, somewhat more of a stir than I expected, but analysis is only as good as it is re-examined, as we do below.

There are distinct limitations to the Twitter format, to be sure. Analytic nuance is lost, supporting lines of argument and foundational evidence are nearly obscured. Even estimative language may be curtailed, if one is not cautious. All that is left is what would effectively be the key judgement (KJ) bullet points in a finished intelligence product.

It is for this reason I argued for years against attempting to publish to consumers in this way, due to the expectations that weigh upon intelligence as an organization. The irony of doing so now is not lost on me. If it had been, I am sure many of my friends and colleagues would continue to remind me. Delivering only KJs is particularly challenging in futures intelligence estimates, where the bulk of the value of a product is actually found in the reasoning about trends, drivers, and the processes of their interactions.

Thus it is more appropriate to consider each tweet not a KJ, but rather an estimative signpost – a marker in the unknown stream of future time, around which one may see the flow of present uncertainties as they may yet manifest, or divert. The process is much like casting stones into the water, where attention is paid as much to the ripples out from the initial point of impact.

But looking back, how do the estimative signposts in last year’s Tweet storm of predictive analysis hold up? This is for the reader to judge. But it is worth laying out the case here. Note that the following is slightly re-ordered from the original thread, to link discussions across observed issues.

On medical intel / care target breaches, and political hack & leak objectives

Adversaries indeed discovered the utility of compromising private medical information for political pressure. The Iranian attributed Black Shadow operations against Israeli medical targets are among the most visible of these developments. Additional criminal extortion actions against other medical services providers have also surfaced material offering potential political leverage, but it remains unclear the extent to which hostile services have been accumulating this material in circulation, or in private transactions.

A few estimative signposts for debate re cyber conflict in '21 + 6 months (span of Moore). Thread follows in no particular order

— HostileSpectrum (@HostileSpectrum) December 31, 2020

-Major breach of medical intelligence &/or care targets will be found. First adversary use will be in hack & leak operations with political objectives.

This CONOPs has not yet, however, extended to high profile leadership (at least as far as publicly known to date). Such extension is in some ways likely inevitable in an aging West, where the longevity, vigour, and even competence of major political figures is subject to frequent speculation. Yet the value of such privately held knowledge, particularly in times of crisis, remains a substantial inhibitor for random disclosure – as is the likelihood of reciprocal disclosures more likely to call into question the control that may be exerted by the heads of authoritarian regimes.

The health of leaders will of course remain a substantial intelligence target (as Rose McDermott and Jerrold Post have each written about). And the impact of selectively timed disclosures will almost certainly continue to remain problematic for societies unable to adapt to the pressures of adversaries’ deliberate active measures. Even if the adversary never chooses to actively leverage such espionage take for influence operations campaigns, the value of stolen medical intelligence may nonetheless remain substantial in allowing hostile services - and competing states’ decisionmakers - to focus on the leaders they are more likely to be dealing with over the longer term. Substantial advantages also accrue here in positioning for the turbulence of unexpected political transitions caused by illness or incapacity.

Stunning 0days disclosed with metronomic regularity

*Stunning game-over 0days will be disclosed with metronomic regularity in foundational elements of multiple ecosystems, but largely ignored due to complexity of vulns, scope & impact of required remediation, & inability of legacy vendors to detect exploitation ITW.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

There is no question that 2021 saw the exploit treadmill running faster than enterprises or even the best individuals in our field could keep up. For each major bug disclosed, the rotten wood of decayed legacy software beneath yields additional exploitation value. And our adversaries have not only noticed, but seem to be pressing ever faster on these rapidly collapsing attack surfaces. Each of the stunning bugs of ’21 indeed only served as blood in the water, calling in predators for the feeding frenzy. We still have not yet come to terms with highly parallelized, independent threat evolution across multiple actors as a result of these events. Nor are we cognizant of what this means for ever more exhausted defenders.

On the tarnishing of myths regarding US, FVEY offensive dominance

*Offensive talent proliferation will notably enhance lower tier programs towards a common generalized mean in stock target environments. Higher end capabilities will increasingly be something of a separate grammar, provoking difficult debates between shops following only open

— HostileSpectrum (@HostileSpectrum) December 31, 2020

*Myth of US, FVEY unique offensive dominance will be further tarnished, if not shattered. Multiple policy proposals pre-supposing Western "original sin" in CNE, other OCO will nonetheless be advanced undaunted as if correlation unchanged. To snickers & encouragement by adversary

— HostileSpectrum (@HostileSpectrum) December 31, 2020

One remains skeptical of comparative capabilities evaluation rankings, despite multiple attempts by different parties to establish varying indexes. The continued consensus that the US and Five Eyes allies remain firmly ensconced at the top of these rankings must also likewise be looked at with appropriate caution. We may in the first instance question entirely the character of offensive advantage in the domain, as my friend and colleague Jay Healey does. We may also consider capabilities demonstrated by conspicuous display, as in the profligate burning of bugs on parade at the Tianfu Cup and other recent events hosted in China. One must be cautious not to measure only what has been caught, because here it is the things that are not seen that define the highest end edge of the capabilities spectrum. It is to be hoped that there remain stunning, game changing portfolios held in reserve somewhere in the dark of a closely held allied program.

But that is increasingly not the impression conveyed by those in the US government, or among allies. When a senior intelligence community official acknowledges publicly that the US now must become fast followers, we have reached a tipping point. Yet it may still take some time for this awareness to ripple through the policy community, let alone to influence its engagement with scarce technical talent and the fragile engines of capabilities development.

On offensive talent proliferation, and automated exploit development

*Evidence of automated exploit development will emerge. In a much lower tier adversary than expected. Because the higher end programs that have adopted these capability generation approaches will still be at it, and not getting caught.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Red sourcing and other commodity acquisitions strategies do indeed continue to have notably dominated lower tier programs, and served to create a generalized baseline mean for intrusions leveraging all the usual implant and infrastructure tooling. Proliferation was amply demonstrated not only in direct movement of talent, but in the disclosure of playbooks and associated process tooling. It was almost certainly not the first time that adversaries had seen each other’s operator checklists, and the development of formalized stepwise action models serves to diffuse knowledge within larger numbers of less experienced cadres with reduced initial training and education burden. Quality does suffer, but as always only needs to remain “good enough” against the class of targets to be serviced. Which rapid programmatic expansion defines in part at lower thresholds of sensitivity through its own scaling. Hit enough things, and an intrusion set’s quantity of accesses has a quality of its own.

Higher end capabilities indeed continue to remain a separate grammar, to the point that even when publicly disclosed they go largely unexamined. There are rare exceptions, and at some delay, such as the over ten month lag in public analysis of the stunning FORCEDENTRY iOS exploit – but it is the exceptions that prove the rule.

Evidence of automated exploit development remains more elusive than expected, at least insofar as the public record has established. Lower tier adversary interest continues to be observed, but it remains unclear how many programs have effectively integrated these approaches into their capabilities development processes. The leap from mere fuzzing to a more sophisticated operational use of modern program analytic technologies seems to be for some teams harder than they anticipated.

On disclosure, VEP, & exploit portfolio sales, & export control

*Debates over disclosure, VEP, & exploit portfolio sales still won't die. Export control proposals will still waste everyones time, energy, but rack up billable hours for lobbyists & lawyers.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Commerce snuck its rulemaking on export control in before ’21 ended, only to be met with resounding silence. In part this was because the rule only really bubbled up during the holidays, but more so because large organizations already under substantial threat pressures made the otherwise largely rational choice to ignore it as one more government imposed paper exercise, as meaningless in its implementation as it is voluminous in its word salad. Yet this merely defers reckoning to another day, and compounds billable hours for those lobbyists and lawyers as things come into effect, regardless of industry feedback. The policy community continues the unfortunate trend of treating 0day as if they are only found in the US, when evidence mounts that the locus of real action has moved elsewhere. In this, other states are increasingly choosing to exercise stronger controls – not out of altruistic motivations, to protect the wider ecosystems or even to regulate negative externalities of vulnerability markets – but rather to better control early access and first mover advantage when presented with valuable portfolios. Any illusions of a Chinese government VEP policy similar to the one in the US and Allied states were also very much shattered, and no one expected even the semblance of such a thing from Russian, DPRK, or Iranian offensive cyber programs.

On lethal outcomes from offensive cyber effects

*Offensive cyber ops with lethal outcomes will again happen. They will again be ignored as higher order effects that can't be quantified, conclusively traced, or narrated simply enough for the compromised attention spans of those doomed to forget before the next time.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

The old tired debates continue. Those that understand dependencies, and higher order effects, felt all too keenly the weight of adversary action even as mounting morbidity and mortality data continued to be ignored. And it seems that within the span of the ‘21 estimate, if not the year itself, we may well once again see lethal contributions on foreign battlefields.

Inadvertent trigger of pre-positioned implants

*Long running intrusion set delivering OPE recon & implant options will be inadvertently triggered during period of heightened geopolitical tension. Attribution debate will be complicated by heavily entangled nth party access, litigation threats, competing press releases & leaks.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Thus far as publicly disclosed, unintended effects from the execution of implants intended for operational preparation of the environment have apparently not yet come to pass. For which we are thankful. But in multiple major crisis events, with immediate geopolitical (in the true international relations sense of that term) and other pol-mil-econ tensions, the potential for missteps by immature operators with poor oversight, limited process structure, and deeply entangled nth party access complications remains a serious concern through the estimative window. One would nonetheless continue to hope that this signpost remains wrong, and at the furthest edge of the possible.

On autonomous, wormable payloads

*An autonomous wormable payload will be discovered, with complex targeting logic & extensive modularity, only a fraction of which will be fully reversed or understood. But this will nonetheless be used in marketing materials for years to come.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Here, the distinctions between public knowledge, private intelligence holdings, and researcher findings were substantially highlighted over the past year. We have seen multiple vulnerabilities in major targets that are manifestly suited to wormable RCE. Yet for some reason, there remains not only a reluctance to accept the potential for such outcomes, but even direct hostility to indications of adversary interest and development. The most recent of these cases is of course the Log4j bug, discussion of which devolved into debates over definitions of autonomy, and fundamental questions over the degree to which behavioral observables manifest in artifacts may be seen to demonstrate adversary intentions (alone, or in concert with other collection). If this is where the consensus knowledge of the year ended, there is limited prospect of taking up the other questions of worms that remain harder to find in constrained propagation dictated by complex targeting logic, and harder to reverse and understand (in the very modularity that makes such tooling powerful in application). One would have expected better from the community of practice, but such is where we are in the present moment.

On Russian espionage compromise of cloud targets, and other operations in major platforms

*Other shoes will drop re ongoing .ru espionage compromising cloud targets. The full details, actual timelines, & adversary intent will still not be established. But the case will be continue to be cited as both policy & sales narrative takes hold.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

*Major operations conducted solely in platform specific environments will increase divergence between intel picture under tightly controlled NDA & common knowledge after being watered down by legal, PR. Resulting in only worse surprise, backlash in wake of future major incident

— HostileSpectrum (@HostileSpectrum) December 31, 2020

The full dimensions of adversary enablement operations, and compromise of key common dependencies across the ecosystems, remain very much unclear. The continued corrosion of an effective common intelligence picture as post-incident findings are redacted, minimized, or withheld degrades our assessments. In the absence of the kind of log and artifact observables that cyber threat intelligence practitioners are more used to working with, other collection activities and analytic techniques must be brought to bear. Where this is done, or not done, has resulted in a divide between camps that simply see the world differently – often as a result of their orientation towards offensive or defensive problems, and sadly as much due to anchoring on prior estimates not revised in the face of subsequent events. Narratives have indeed taken hold, and hardened, in ways that will complicate assessment of future problems.

In other words, the centralized control of the current dominant cloud platforms makes collaborative forensics analysis harder, and thus challenges our longer term strategic understanding.

On failure to warn

Failure to warn as a theory of liability did indeed become prominent in ’21, but from an admittedly unexpected source. It has long been understood that USG desires to regulate its way into visibility, if not centrality, during cyber incidents impacting private enterprises, who see little value in engaging with a host of competing agencies and their component elements that provide no meaningful assistance, and only level further conflicting demands. Proposals to advance mandatory incident disclosure notification with increasingly ambitious (if not unrealistic) scope and timeline requirements still have not achieved legislative traction, although executive actions to implement similar obligations are accumulating across multiple sectors. Beyond regulatory demands, FBI has now advanced the theory, in comments contemporaneous to a superseding indictment in the matter of the 2016 Uber extortion case, that executives may be directly charged if firms do not provide information to the government, where there exists the possibility that such disclosures could have been leveraged for future warning to other victims. One expects other civil actions will rapidly follow – especially where multiple firms increasingly seem to assert that they need provide no disclosure to customers regarding implications for their products of even known vulnerabilities exploited in the wild; or any information regarding substantial intrusion incidents on their platforms, regardless of potential impact to the customers of those platforms. As usual, it seems these things will be tested not through cool rationality of policy debate and decision – but through the heated contests and random outcomes of the courtroom. The resulting precedents will inevitably lead to risk aversion, further overlawyering, and ultimately yet more disincentives for the private sector common intelligence picture.

On changing CTI production

*CTI embrace of journalism models as a substitute for analytic production will continue apace, further devolving towards clickbait. Other intelligence tradecraft standards will continue to be sacrificed quietly along the way as fewer analysts, managers are taught the difference

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Shortly after a presently unthinkable breach of professional practices will be vigorously defended via some tortured logic as a matter of common groupthink, including prominent talks. Which will lead inexorably to generative neural network driven reporting to displace...

— HostileSpectrum (@HostileSpectrum) December 31, 2020

...first line FINTEL production, displacing billets & reducing roles in which collectors & analysts can grow into more seasoned, experienced professionals. But since so many spend their time as TwitterSOC or West Wing cosplayers, this subtle corrosion won't be noticed right away

— HostileSpectrum (@HostileSpectrum) December 31, 2020

The collapse of traditional media as its revenue sources are cannibalized by the advertising infrastructure underpinning the entirety of the technology ecosystem has displaced a lot of folks that string words together for a living. Many of these folks are used to doing so under deadlines, and with a focus on shorter and direct pieces. As intelligence organizations have long recognized, these are useful traits in a line analyst. However, these are very different professions, and the tasks of an intelligence professional are more than simply writing something the customer likes to read.

*Driving emerging vendors new to market to offer increasingly loud, poorly sourced & badly supported takes that will be indistinguishable from bad Twitter drivel, but repeated verbatim by pool of tech reporters

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Worse yet, where intelligence production is seen as useful to the organization solely as a means of generating marketing collateral that is then pushed in the hopes of generating positive media coverage, devolution to a more journalist friendly audience may creep in as a requirement. As we have seen, some shops have sought to cut out the middleman and directly hire former press talent not only in intelligence roles, but to support their own newly established “new media” model outlets. Such pseudo-journalism has in the past year very much challenged established analytic tradecraft standards, blurring the line between collection, analysis, and delivery.

This in turn spurred even further devolution in newly established shops where tradecraft remains apparently unknown. Suffice it to say such noise will always plague us, but there is an expectation that the market can be self-correcting. However, I routinely underestimate the longevity of mediocrity in this space.

Yet there is an absolute clock running down to the tipping point at which new technologies displace the labour intensive and talent specific tasks that make up much of cyber threat intelligence. There are interesting start-ups this year that seem poised to disrupt the space. And the volume of reporting generated from automated processes that practitioners at multiple levels regularly consume has risen inexorably this year.

On economic espionage value in foreign technology production

The continued attempts to deny the military and economic utility of cyber espionage in cumulative effects remain puzzling. But Western awareness of PLA development and deployment of new systems that bear unmistakable lineage in compromised programs has lagged, as much due to deliberate attempts to avoid considering what this means for military budgets in a time when political leadership would prefer much deeper austerity. Yet the adversary not only gets a vote, but has set the meeting agenda.

*Stakes will continue to be driven through heart of arguments re .cn integration of economic espionage take as ever larger number of deployed PLA systems will be seen directly incorporating elements unmistakably stolen from Western DIB. But debate will drag on for another decade.

— HostileSpectrum (@HostileSpectrum) December 31, 2020

It remains to be seen when this argument shifts. Perhaps when other self-delusions regarding broken promises of restraint are also abandoned, or perhaps it will require a flaming datum to illustrate the point.

On infosec cons

*Infosec cons will be surprised to find that in postpandemic world when travel is again possible large swath of orgs won't fund physical presence. Will take years for knockon to industry to fully manifest, but 1st impacts will be seen on job mobility & new entrant maturity curves

— HostileSpectrum (@HostileSpectrum) December 31, 2020

Pandemic travel restrictions not being over, the effect on the con scene remains as yet uncertain. The brief period of optimism of late summer and early fall ’21 does not provide sufficient basis for retrospective evaluation.

Having laid out the predictive record, warts and all, it is traditional to close with an exhortation to intelligence professionals to take up the burden to do better than what has been presented before. If one considers the analogy of casting stones, this is all perhaps just one more thing to be slung towards those that may take up the burden. As the old Greek inscriptions on sling projectiles read: “DEXAI” (Catch!)

I may well throw another volley of estimative signposts for the new year (plus 6 months, to account for the span of Moore’s Law), once again via Tweet storm, in the coming days. One nonetheless hopes to see further more formalized efforts, grounded in properly rigorous tradecraft, from other shops this year.

About the author: JD Work is a former intelligence professional turned academic.

The views and opinions expressed here are those of the author and do not necessarily reflect the official policy or position of any agency of the U.S. government or other organization.